Hi all,

In this post I will show how to run a MapReduce program on Ubuntu Linux, using the word count example that ships with Hadoop.

Prerequisites:

1. Hadoop is installed successfully.

2. Hadoop is running. (Start it with bin/start-all.sh from the Hadoop installation directory.)
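One way to confirm the second prerequisite is to look at the Java processes listed by jps, the JDK process lister. The sketch below assumes a single-node Hadoop 1.x setup, where the five daemons are NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker; the function name missing_daemons is only an illustrative helper, not part of Hadoop.

```shell
#!/bin/sh
# Reads jps-style output ("<pid> <ClassName>" per line) on stdin and
# prints the name of every expected Hadoop 1.x daemon that is absent.
# missing_daemons is a hypothetical helper name used for this sketch.
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # Compare the whole second field, so "NameNode" does not
        # accidentally match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# Usage on a real machine (requires the JDK's jps on PATH):
#   jps | missing_daemons
```

If the function prints nothing, all five daemons are up. Any name it prints is a daemon that did not start, and its log file is the place to look next.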

Here we go:

1. Create a directory in Ubuntu, for example:

admin@admin-G31M-ES2L:/usr$ mkdir testjars

2. Change into the newly created directory.

admin@admin-G31M-ES2L:/usr$ cd testjars

3. Create an input file named input.txt and paste some text into it.

admin@admin-G31M-ES2L:/usr/testjars$ vi input.txt
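If you would rather not use an editor, the input file can also be created non-interactively. This is a minimal sketch: WORK_DIR is a hypothetical variable (defaulting to /tmp/testjars here, since creating a directory under /usr may need root permissions), and the sample text is made up.

```shell
#!/bin/sh
# WORK_DIR is an assumption for this sketch; the post uses /usr/testjars.
WORK_DIR="${WORK_DIR:-/tmp/testjars}"
mkdir -p "$WORK_DIR"

# Write a few lines of sample text (illustrative only) to input.txt.
cat > "$WORK_DIR/input.txt" <<'EOF'
hadoop runs map reduce jobs
map reduce jobs count words
words words words
EOF

# Show the line count as a quick sanity check.
wc -l "$WORK_DIR/input.txt"
```

Set WORK_DIR=/usr/testjars before running it to match the paths used in the rest of this post.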

4. Copy this directory to HDFS. The target path must match the input path used when running the job in step 7.

admin@admin-G31M-ES2L:/usr/local/hadoop$ bin/hadoop dfs -put /usr/testjars/ /usr/testjars

5. The directory containing the input file for the MapReduce job is now in HDFS.

Note: The word count MapReduce job reads its input from HDFS, which is why the input file had to be placed there first.

6. The word count program is available in "hadoop-examples-1.0.4.jar" in the Hadoop installation directory.

7. Everything is now ready to run the word count MapReduce job.

Let's run it:

admin@admin-G31M-ES2L:/usr/local/hadoop$ bin/hadoop jar hadoop-examples-1.0.4.jar wordcount /usr/testjars/input.txt /usr/testjars/output

13/11/05 12:38:25 INFO input.FileInputFormat: Total input paths to process : 1

13/11/05 12:38:25 INFO util.NativeCodeLoader: Loaded the native-hadoop library

13/11/05 12:38:25 WARN snappy.LoadSnappy: Snappy native library not loaded

13/11/05 12:38:25 INFO mapred.JobClient: Running job: job_201311051204_0009

13/11/05 12:38:26 INFO mapred.JobClient: map 0% reduce 0%

13/11/05 12:38:40 INFO mapred.JobClient: map 100% reduce 0%

13/11/05 12:38:52 INFO mapred.JobClient: map 100% reduce 100%

13/11/05 12:38:57 INFO mapred.JobClient: Job complete: job_201311051204_0009

13/11/05 12:38:57 INFO mapred.JobClient: Counters: 29

13/11/05 12:38:57 INFO mapred.JobClient: Job Counters

13/11/05 12:38:57 INFO mapred.JobClient: Launched reduce tasks=1

13/11/05 12:38:57 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=13948

13/11/05 12:38:57 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0

13/11/05 12:38:57 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0

13/11/05 12:38:57 INFO mapred.JobClient: Launched map tasks=1

13/11/05 12:38:57 INFO mapred.JobClient: Data-local map tasks=1

13/11/05 12:38:57 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=11056

13/11/05 12:38:57 INFO mapred.JobClient: File Output Format Counters

13/11/05 12:38:57 INFO mapred.JobClient: Bytes Written=3043

13/11/05 12:38:57 INFO mapred.JobClient: FileSystemCounters

13/11/05 12:38:57 INFO mapred.JobClient: FILE_BYTES_READ=4166

13/11/05 12:38:57 INFO mapred.JobClient: HDFS_BYTES_READ=3856

13/11/05 12:38:57 INFO mapred.JobClient: FILE_BYTES_WRITTEN=51505

13/11/05 12:38:57 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=3043

13/11/05 12:38:57 INFO mapred.JobClient: File Input Format Counters

13/11/05 12:38:57 INFO mapred.JobClient: Bytes Read=3745

13/11/05 12:38:57 INFO mapred.JobClient: Map-Reduce Framework

13/11/05 12:38:57 INFO mapred.JobClient: Map output materialized bytes=4166

13/11/05 12:38:57 INFO mapred.JobClient: Map input records=56

13/11/05 12:38:57 INFO mapred.JobClient: Reduce shuffle bytes=0

13/11/05 12:38:57 INFO mapred.JobClient: Spilled Records=562

13/11/05 12:38:57 INFO mapred.JobClient: Map output bytes=5583

13/11/05 12:38:57 INFO mapred.JobClient: CPU time spent (ms)=2550

13/11/05 12:38:57 INFO mapred.JobClient: Total committed heap usage (bytes)=177930240

13/11/05 12:38:57 INFO mapred.JobClient: Combine input records=499

13/11/05 12:38:57 INFO mapred.JobClient: SPLIT_RAW_BYTES=111

13/11/05 12:38:57 INFO mapred.JobClient: Reduce input records=281

13/11/05 12:38:57 INFO mapred.JobClient: Reduce input groups=281

13/11/05 12:38:57 INFO mapred.JobClient: Combine output records=281

13/11/05 12:38:57 INFO mapred.JobClient: Physical memory (bytes) snapshot=320823296

13/11/05 12:38:57 INFO mapred.JobClient: Reduce output records=281

13/11/05 12:38:57 INFO mapred.JobClient: Virtual memory (bytes) snapshot=1064452096

13/11/05 12:38:57 INFO mapred.JobClient: Map output records=499

admin@admin-G31M-ES2L:/usr/local/hadoop$

8. The job ran successfully.

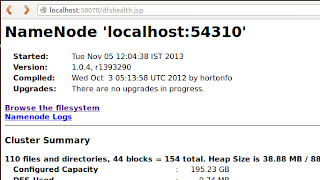
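In the counters above, "Map output records=499" is the total number of words emitted by the mapper and "Reduce output records=281" is the number of distinct words written to the output. For a small input you can cross-check both numbers locally. The sketch below assumes that the bundled word count example splits its input on whitespace and is case-sensitive; local_wordcount is a hypothetical helper and the sample text is made up.

```shell
#!/bin/sh
# Local approximation of word count: prints one "word<TAB>count" line
# per distinct whitespace-separated token, sorted in byte order.
# local_wordcount is a hypothetical helper name for this sketch.
local_wordcount() {
    tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{ print $2 "\t" $1 }'
}

# Demo on a small throwaway file.
sample=$(mktemp)
printf 'to be or not\nto be\n' > "$sample"
local_wordcount "$sample"
# Total tokens corresponds to "Map output records".
echo "total tokens: $(tr -s '[:space:]' '\n' < "$sample" | grep -c .)"
# Distinct words corresponds to "Reduce output records".
echo "distinct words: $(local_wordcount "$sample" | wc -l | tr -d ' ')"
rm -f "$sample"
```

For the sample text this reports 6 tokens and 4 distinct words. Run against a local copy of input.txt, the two totals should line up with the map and reduce record counters if the whitespace assumption holds.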

9. Now let's check the output in the NameNode file system browser.

My NameNode URL is:

http://localhost:50070/

The screenshots show the processed word count output file under /usr/testjars/output.
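The same output can also be read from the command line instead of the web UI. This is a sketch, not the only way to do it: show_job_output is a hypothetical helper, and it assumes the result files in the output directory are named with a "part-" prefix, which is what the bundled examples normally produce.

```shell
#!/bin/sh
# Lists the job output directory in HDFS, then prints the result files.
# $1: path to the hadoop launcher script, $2: HDFS output directory.
# show_job_output is a hypothetical helper name for this sketch.
show_job_output() {
    hadoop_bin=$1
    output_dir=$2
    # The glob is quoted so that Hadoop, not the local shell, expands it.
    "$hadoop_bin" dfs -ls "$output_dir" &&
        "$hadoop_bin" dfs -cat "$output_dir/part-*"
}

# Usage, from /usr/local/hadoop as in the steps above:
#   show_job_output bin/hadoop /usr/testjars/output
```

Each line printed by the cat step is a word followed by a tab and its count.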
